In the realm of artificial intelligence, one innovation has dramatically changed how we interact with machines using natural language: Generative Pre-trained Transformers (GPT). Developed by OpenAI, GPT is a family of deep learning models built for Natural Language Processing (NLP). Its impact is extensive, reaching all major industries and even our personal lives. Can you imagine your work without ChatGPT? Probably not!

The generative pre-trained transformer market is also one of the fastest-growing sectors in the world. According to a Statista report, the global GPT market is expected to reach $356.10 billion by 2030.

But what is a generative pre-trained transformer and how does it work? How does it impact the different areas of our lives and how can we utilize it? These are the main questions that most of us have in our minds. And that’s what we are going to answer in this blog. So, without any further ado let’s get right into it.

What Is A Generative Pre-trained Transformer?

GPT models are designed to generate coherent and contextually relevant text based on a given prompt. They leverage a type of deep learning architecture known as the transformer to process and understand the complex patterns inherent in human language. The transformer is a neural network architecture that brought significant improvements over older models like RNNs and LSTMs.

The concept of “pretraining” is crucial here. GPT models are first trained on vast datasets, allowing them to learn the intricacies of language. This pretraining equips the models with a general understanding of linguistic structures, which can then be fine-tuned for specific tasks.

How GPT Works: The Transformer Architecture

The power of GPT lies in the transformer architecture. Before transformers, models like Recurrent Neural Networks (RNNs) and Convolutional Neural Networks (CNNs) dominated NLP tasks. However, these architectures struggled with long sequences of text, particularly when it came to capturing dependencies between distant words in a sentence.

Self-Attention Mechanism

Transformers address these limitations through a mechanism known as self-attention. In GPT, self-attention allows each word in a sequence to consider every other word, thereby creating a context-aware representation of the text. This is achieved using attention heads, each focusing on different parts of the sentence. For instance, in the sentence “The cat sat on the mat,” the self-attention mechanism helps the model understand that “sat” is closely associated with “cat” rather than “mat.”

This allows the model to focus on relevant parts of the input when generating predictions.

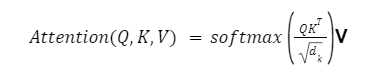

Self-attention is defined by:

$$\text{Attention}(Q, K, V) = \text{softmax}\left(\frac{QK^T}{\sqrt{d_k}}\right)V$$

Where:

Q = Queries

K = Keys

V = Values

d_k = Dimension of key vectors
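To make the roles of Q, K, V, and d_k concrete, here is a minimal NumPy sketch of scaled dot-product attention in its standard form, softmax(QK^T / sqrt(d_k)) V. The matrix sizes and random inputs are assumptions chosen only for illustration; a real GPT layer learns the projections that produce Q, K, and V.

```python
import numpy as np

def softmax(x, axis=-1):
    # Subtract the row maximum before exponentiating for numerical stability.
    exp = np.exp(x - x.max(axis=axis, keepdims=True))
    return exp / exp.sum(axis=axis, keepdims=True)

def scaled_dot_product_attention(Q, K, V):
    """Return (output, weights) for softmax(Q K^T / sqrt(d_k)) V."""
    d_k = K.shape[-1]
    scores = Q @ K.T / np.sqrt(d_k)     # (n_queries, n_keys) similarity scores
    weights = softmax(scores, axis=-1)  # each row is a distribution over keys
    return weights @ V, weights

# Illustrative sizes only: 6 tokens, key dimension 8, value dimension 16.
rng = np.random.default_rng(0)
Q = rng.normal(size=(6, 8))
K = rng.normal(size=(6, 8))
V = rng.normal(size=(6, 16))
output, weights = scaled_dot_product_attention(Q, K, V)
print(output.shape, weights.sum(axis=1))
```

Each row of `weights` says how much one token draws on every other token, and each row sums to 1.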

Multi-Head Attention

GPT also employs multi-head attention, a process where multiple attention mechanisms work in parallel to capture different aspects of the input sequence. Each head operates independently, focusing on different parts of the sentence, and their outputs are combined for richer representations.
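A rough sketch of the idea, assuming NumPy and randomly initialized projection matrices (`W_q`, `W_k`, `W_v`, `W_o` are illustrative names, not taken from any GPT codebase): the model dimension is split across heads, each head runs attention on its own slice, and the results are concatenated and projected.

```python
import numpy as np

def softmax(x, axis=-1):
    exp = np.exp(x - x.max(axis=axis, keepdims=True))
    return exp / exp.sum(axis=axis, keepdims=True)

def multi_head_attention(X, W_q, W_k, W_v, W_o, num_heads):
    seq_len, d_model = X.shape
    d_head = d_model // num_heads

    def split_heads(M):
        # (seq_len, d_model) -> (num_heads, seq_len, d_head)
        return M.reshape(seq_len, num_heads, d_head).transpose(1, 0, 2)

    Q, K, V = split_heads(X @ W_q), split_heads(X @ W_k), split_heads(X @ W_v)
    scores = Q @ K.transpose(0, 2, 1) / np.sqrt(d_head)  # (heads, seq, seq)
    weights = softmax(scores, axis=-1)                   # one attention map per head
    heads = weights @ V                                  # (heads, seq, d_head)
    # Concatenate the heads back into (seq_len, d_model), then mix them.
    concat = heads.transpose(1, 0, 2).reshape(seq_len, d_model)
    return concat @ W_o, weights

rng = np.random.default_rng(0)
d_model, num_heads, seq_len = 16, 4, 5
X = rng.normal(size=(seq_len, d_model))
W_q, W_k, W_v, W_o = (rng.normal(size=(d_model, d_model)) * 0.1 for _ in range(4))
out, attn = multi_head_attention(X, W_q, W_k, W_v, W_o, num_heads)
print(out.shape, attn.shape)
```

The output keeps the input shape, while `attn` holds a separate attention map for each head.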

Encoder-Decoder Structure

While the original transformer model has an encoder-decoder structure, GPT simplifies this by using only the decoder part for text generation. The encoder is primarily used for input processing (as in models like BERT), whereas GPT, being generative, focuses on predicting the next word in a sequence.
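In a decoder-only model, next-word prediction is typically enforced with a causal mask, so that each position can attend only to itself and earlier positions. The sketch below assumes NumPy and uses all-zero scores purely to make the masking effect easy to read.

```python
import numpy as np

def causal_mask(seq_len):
    # True where attention is allowed: position i may see positions 0..i.
    return np.tril(np.ones((seq_len, seq_len), dtype=bool))

def masked_softmax(scores, mask):
    # Disallowed positions get -inf, so they receive exactly zero weight.
    masked = np.where(mask, scores, -np.inf)
    exp = np.exp(masked - masked.max(axis=-1, keepdims=True))
    return exp / exp.sum(axis=-1, keepdims=True)

scores = np.zeros((4, 4))  # uniform scores, for illustration only
weights = masked_softmax(scores, causal_mask(4))
print(weights)
```

The first token can only attend to itself, while the last token spreads its attention evenly over all four positions.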

Backpropagation and Training

GPT is trained using backpropagation, where gradients are computed for each weight in the network, and the model’s parameters are adjusted using an optimization algorithm (usually Adam). This process is repeated over multiple epochs to minimize the difference between the predicted and actual outputs, ultimately improving the model’s performance.
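As a toy illustration of the update rule, here is a sketch of Adam applied to a single scalar parameter. The quadratic loss, the learning rate of 0.1, and the 500 steps are assumptions for the example; in a real model the gradient comes from backpropagation through the whole network.

```python
import math

def adam_step(param, grad, m, v, t, lr=0.1, beta1=0.9, beta2=0.999, eps=1e-8):
    """One Adam update for a scalar parameter at step t (t starts at 1)."""
    m = beta1 * m + (1 - beta1) * grad          # running mean of gradients
    v = beta2 * v + (1 - beta2) * grad * grad   # running mean of squared gradients
    m_hat = m / (1 - beta1 ** t)                # bias correction for early steps
    v_hat = v / (1 - beta2 ** t)
    param = param - lr * m_hat / (math.sqrt(v_hat) + eps)
    return param, m, v

# Toy loss: (w - target)^2, whose gradient is 2 * (w - target).
target = 3.0
w, m, v = 0.0, 0.0, 0.0
for t in range(1, 501):
    grad = 2 * (w - target)
    w, m, v = adam_step(w, grad, m, v, t)
print(round(w, 3))
```

The parameter moves toward the target and settles there, which is the same behavior, at a vastly larger scale, that drives the weights of a GPT model during training.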

Training large-scale models like GPT requires vast computational resources, and parallelization techniques are often employed to speed up training. Distributed training across multiple GPUs or TPUs is a common approach, enabling the model to handle the immense number of parameters.
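One common form of parallelization is data parallelism: each device computes gradients on its own shard of the batch, and the gradients are averaged before the update. The sketch below simulates this on a single machine with NumPy and a linear model; the four "workers" and the mean-squared-error loss are assumptions for illustration.

```python
import numpy as np

def mse_gradient(w, X, y):
    # Gradient of the mean squared error (1/n) * ||Xw - y||^2 with respect to w.
    return 2.0 * X.T @ (X @ w - y) / len(y)

rng = np.random.default_rng(0)
X = rng.normal(size=(32, 4))
y = rng.normal(size=32)
w = rng.normal(size=4)

# Gradient computed on the full batch by a single device.
full_grad = mse_gradient(w, X, y)

# Simulate 4 workers, each holding an equal shard of the batch.
num_workers = 4
shard_grads = [
    mse_gradient(w, X_shard, y_shard)
    for X_shard, y_shard in zip(np.split(X, num_workers), np.split(y, num_workers))
]
# The "all-reduce" step: average the per-worker gradients.
averaged_grad = np.mean(shard_grads, axis=0)
print(np.allclose(full_grad, averaged_grad))
```

With equal-sized shards, the averaged gradient matches the full-batch gradient, which is why the work can be split across devices without changing the update.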

Evolution of Generative Pre-trained Transformers (GPT-1 to GPT-4)

The evolution of generative pre-trained transformers from simple chatbots to enterprise AI has been nothing short of extraordinary. Here’s a glimpse:

GPT-1: The Beginning of Generative Pretraining

GPT-1 was introduced in 2018 with 117 million parameters. It was the first model to demonstrate that generative pretraining on large amounts of unlabeled text followed by fine-tuning on smaller, labeled datasets could produce high-quality results on various NLP tasks. GPT-1 established the foundation for transfer learning in NLP, leveraging unsupervised learning to build strong language representations.

| Model | Parameters | Notable Features |
| --- | --- | --- |
| GPT-1 | 117M | Introduction of generative pretraining |

GPT-2: Scaling Up

Released in 2019, GPT-2 scaled up the model to 1.5 billion parameters. Its increased size allowed it to generate more coherent and contextually relevant text. GPT-2 also raised concerns about potential misuse, leading OpenAI to initially withhold the full model. Its eventual public release sparked widespread adoption and further highlighted the potential of large-scale language models.

| Model | Parameters | Notable Features |
| --- | --- | --- |
| GPT-2 | 1.5B | Capable of generating realistic text |

GPT-3: A Quantum Leap

GPT-3 (2020) was a game-changer, with 175 billion parameters. GPT-3 showed remarkable improvements in few-shot, one-shot, and zero-shot learning, allowing it to perform tasks with minimal examples. Its ability to understand and generate language across a wide range of tasks without specific task-related fine-tuning made it a landmark achievement in AI.
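The difference between zero-shot and few-shot use comes down to what goes into the prompt. Here is a small sketch of a prompt builder; the `Input:` / `Output:` layout and the sentiment task are illustrative assumptions, not a format prescribed by OpenAI.

```python
def build_prompt(task, examples, query):
    """Assemble a prompt: task description, optional worked examples, then the query."""
    lines = [task, ""]
    for text, label in examples:
        lines.append(f"Input: {text}")
        lines.append(f"Output: {label}")
        lines.append("")
    lines.append(f"Input: {query}")
    lines.append("Output:")  # the model is expected to continue from here
    return "\n".join(lines)

task = "Classify the sentiment of each input as positive or negative."
examples = [
    ("I loved this film.", "positive"),
    ("The service was terrible.", "negative"),
]
query = "The battery lasts all day."

zero_shot = build_prompt(task, [], query)            # no examples
one_shot = build_prompt(task, examples[:1], query)   # a single example
few_shot = build_prompt(task, examples, query)       # a handful of examples
print(few_shot)
```

No weights change in any of these cases; the examples simply become part of the context the model reads before answering.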

| Model | Parameters | Notable Features |
| --- | --- | --- |
| GPT-3 | 175B | Few-shot learning, robust text generation |

GPT-4: Pushing the Boundaries

GPT-4 (2023) continued the trend of scaling with more refined models and increased data. While OpenAI has not disclosed the exact number of parameters, GPT-4 is widely acknowledged for improved multimodal capabilities (e.g., image and text), enhanced coherence, and better alignment with human values and ethical considerations.

| Model | Parameters | Notable Features |
| --- | --- | --- |
| GPT-4 | Undisclosed | Improved multimodal capabilities |

Pretraining and Fine-Tuning in GPT

Pretraining: The Foundation of GPT

Pretraining is a crucial phase in the development of Generative Pretrained Transformers (GPT). It involves training the model on vast amounts of unlabeled text data to understand the underlying structure and patterns of language. This stage is completely unsupervised, meaning that the model is exposed to raw text and learns to predict the next word in a sentence by examining the context provided by the preceding words. The model does not need labels or explicit task-specific data during this phase, making it incredibly efficient at generalizing across a wide range of NLP tasks.

During pretraining, GPT learns relationships between words, sentence structures, and even deeper linguistic features such as idiomatic expressions and nuances in meaning. This is achieved through causal (autoregressive) language modeling, a technique where the model is tasked with predicting the next token given only the tokens that precede it. (Masked language modeling, where the model fills in missing words using context on both sides, is the objective used by BERT rather than GPT.) Over time, GPT develops a rich representation of language, capturing syntactic, semantic, and contextual information.
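Next-word prediction needs no labels because the text supplies its own targets: the target sequence is simply the input shifted by one token. The sketch below, with a made-up five-word vocabulary, builds those pairs and scores an untrained "model" that rates every word equally, using the average negative log-probability of the correct next token.

```python
import math

def next_token_pairs(token_ids):
    # Inputs are all tokens but the last; targets are the same tokens shifted by one.
    return token_ids[:-1], token_ids[1:]

def cross_entropy(logits, targets):
    """Mean negative log-probability of each target under softmax(logits)."""
    total = 0.0
    for row, target in zip(logits, targets):
        peak = max(row)
        log_norm = peak + math.log(sum(math.exp(x - peak) for x in row))
        total += log_norm - row[target]
    return total / len(targets)

vocab = ["the", "cat", "sat", "on", "mat"]   # toy vocabulary (assumption)
token_ids = [0, 1, 2, 3, 0, 4]               # "the cat sat on the mat"
inputs, targets = next_token_pairs(token_ids)

# An untrained model that gives every vocabulary entry the same score.
uniform_logits = [[0.0] * len(vocab) for _ in inputs]
loss = cross_entropy(uniform_logits, targets)
print(loss, math.log(len(vocab)))
```

A model that guesses uniformly scores log(vocabulary size); training pushes the loss below that baseline.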

Pretraining involves several key components:

Tokenization: Text is broken down into tokens (words, subwords, or characters). GPT typically uses byte pair encoding (BPE) to tokenize text, enabling it to handle words it has never seen before.

Objective Function: The model is trained to minimize a cross-entropy loss function, where it learns to predict the probability distribution of the next word in a sequence given its context.

Optimization: Pretraining requires massive computational resources, typically running on high-performance hardware (e.g., GPUs, TPUs). The Adam optimizer and gradient descent are used to adjust the model’s parameters, ensuring it can learn from its errors and improve over time.

Learning Dynamics: The model makes one or more passes (epochs) through the training dataset. Early training tends to capture basic language rules, while later training refines the model’s understanding of context and meaning.
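To give a feel for the tokenization component listed above, here is a simplified sketch of how byte pair encoding learns its merges: count adjacent symbol pairs across the corpus, merge the most frequent pair into a new symbol, and repeat. It works on characters rather than bytes, and the word counts are an invented toy corpus, so treat it as an illustration rather than GPT’s actual tokenizer.

```python
from collections import Counter

def get_pair_counts(words):
    # words maps a tuple of symbols to how often that word occurs.
    counts = Counter()
    for symbols, freq in words.items():
        for pair in zip(symbols, symbols[1:]):
            counts[pair] += freq
    return counts

def merge_pair(words, pair):
    # Replace every occurrence of the pair with a single merged symbol.
    merged = {}
    for symbols, freq in words.items():
        out, i = [], 0
        while i < len(symbols):
            if i + 1 < len(symbols) and (symbols[i], symbols[i + 1]) == pair:
                out.append(symbols[i] + symbols[i + 1])
                i += 2
            else:
                out.append(symbols[i])
                i += 1
        key = tuple(out)
        merged[key] = merged.get(key, 0) + freq
    return merged

def learn_bpe(corpus_counts, num_merges):
    words = {tuple(word): freq for word, freq in corpus_counts.items()}
    merges = []
    for _ in range(num_merges):
        counts = get_pair_counts(words)
        if not counts:
            break
        best = max(counts, key=counts.get)  # ties go to the pair seen first
        merges.append(best)
        words = merge_pair(words, best)
    return merges, words

corpus = {"low": 5, "lower": 2, "newest": 6, "widest": 3}  # toy word counts
merges, words = learn_bpe(corpus, num_merges=3)
print(merges)   # [('e', 's'), ('es', 't'), ('l', 'o')]
```

Because frequent fragments such as `est` become tokens of their own, an unseen word can still be split into known pieces.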

An important aspect of pretraining is that it can leverage diverse datasets from the internet, such as Common Crawl, Wikipedia, and digital libraries. This vast exposure allows GPT to develop a wide-ranging understanding of language, including rare words, technical jargon, and various languages.

Fine-Tuning: Task-Specific Specialization

After pretraining, GPT undergoes a supervised process known as fine-tuning, where it is adapted to perform specific tasks. Fine-tuning occurs on labeled datasets that are much smaller than the data used during pretraining. The pretraining process enables GPT to start with a robust understanding of general language features, but fine-tuning helps refine this understanding to solve specific problems such as sentiment analysis, text summarization, or machine translation.

Fine-tuning is a form of transfer learning, where knowledge acquired during pretraining is transferred to downstream tasks. This is particularly useful in NLP because labeled datasets for many tasks are scarce or expensive to produce. Fine-tuning adjusts the model’s weights while retaining the general language knowledge acquired during pretraining.

Steps in fine-tuning include:

Task-Specific Data: The model is trained on a smaller, curated dataset relevant to the task at hand. For instance, a medical chatbot would be fine-tuned on medical literature and patient interaction data.

Optimization and Regularization: Fine-tuning involves careful optimization to avoid overfitting, where the model becomes too specialized to the fine-tuning dataset and loses its generalization ability. Techniques like dropout and early stopping are employed to prevent overfitting.

Learning Rate Tuning: A lower learning rate is often used during fine-tuning to avoid overwriting the general knowledge from pretraining. This ensures that fine-tuning gradually nudges the model’s parameters towards the target task.
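Of the techniques in the steps above, early stopping is the easiest to show in a few lines. The sketch below assumes you already have a validation loss for each epoch; the loss values and the patience of 2 are made up for illustration.

```python
def early_stopping_epoch(val_losses, patience=2, min_delta=0.0):
    """Return the index of the epoch at which training would stop."""
    best = float("inf")
    epochs_without_improvement = 0
    for epoch, loss in enumerate(val_losses):
        if loss < best - min_delta:
            best = loss
            epochs_without_improvement = 0
        else:
            epochs_without_improvement += 1
            if epochs_without_improvement >= patience:
                return epoch
    return len(val_losses) - 1  # never triggered: train to the end

# Hypothetical validation losses: improving at first, then overfitting.
val_losses = [0.90, 0.70, 0.62, 0.60, 0.61, 0.63, 0.66]
stop_epoch = early_stopping_epoch(val_losses, patience=2)
best_epoch = min(range(stop_epoch + 1), key=val_losses.__getitem__)
print(stop_epoch, best_epoch)   # 5 3
```

Training halts at epoch 5, and the checkpoint from epoch 3 (the lowest validation loss) is the one worth keeping.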

Applications of GPT in Real-World Scenarios

GPT models have demonstrated their potential across a multitude of industries, thanks to their ability to generate coherent, contextually accurate text. These applications span from everyday tools like chatbots to more specialized domains like legal analysis and medical research.

Content Generation and Copywriting

GPT has fundamentally changed content creation, providing an automated way to produce blog posts, articles, social media content, and even more specialized content like marketing copy or technical documentation. GPT-based tools such as Jasper AI, Copy.ai, and Writesonic allow businesses to generate content at scale with minimal human intervention. Marketers, writers, and entrepreneurs use these tools to:

Generate product descriptions and promotional content.

Create SEO-optimized articles that improve search engine rankings.

Automate the creation of headlines, slogans, and ad copy.

In particular, GPT’s ability to write in different tones, styles, and formats makes it invaluable for personalizing content. For instance, a brand could instruct GPT to write in a formal tone for legal documentation or adopt a conversational style for customer emails.

Chatbots and Virtual Assistants

Virtual assistants and chatbots powered by GPT have seen widespread adoption in customer support and personal assistance. OpenAI’s ChatGPT and Microsoft’s Copilot use GPT models to respond to user queries, often outperforming traditional rule-based chatbots. Some notable use cases include:

Customer Support: GPT can handle a range of customer queries, from providing product information to troubleshooting technical issues. It reduces response times and can manage conversations across multiple languages, thanks to its multilingual training.

Conversational AI: Voice assistants like Siri and Alexa are increasingly integrating GPT-style large language models to improve their ability to maintain context over longer conversations and offer more natural responses.

By using GPT in conversational interfaces, companies improve user engagement and satisfaction while reducing the workload on human agents. According to Straits Research, the global chatbot market is expected to grow to $29.66 billion by 2032.

Coding and Software Development Assistance

Developers now have tools like GitHub Copilot that use GPT to assist with code generation and bug fixing. Trained on vast datasets of open-source code, these models:

Generate code snippets based on comments or partial code input.

Identify and suggest fixes for common bugs.

Offer documentation generation for new functions or APIs.

This helps developers by reducing the time spent on repetitive coding tasks and increasing productivity through automation.

Medical Applications

In healthcare, GPT is being fine-tuned on medical literature and patient data to assist in diagnosis, research, and administrative tasks. Key applications include:

Medical Chatbots: GPT can interact with patients, providing information on symptoms and treatment options, or guiding them through healthcare processes.

Clinical Research: Researchers use GPT to summarize medical papers, identify trends in the literature, and generate hypotheses based on existing data.

These applications improve the accessibility of healthcare information and reduce the burden on medical professionals by automating routine tasks.

Education and Research Tools

Educational tools powered by GPT offer students personalized tutoring and content generation. For instance:

GPT can generate tailored study materials, summarizing complex topics and providing explanations based on the user’s current knowledge level.

In research, GPT can assist academics by summarizing large volumes of papers, suggesting novel research directions, and even generating drafts of research papers.

Ethical Considerations and Limitations of GPT

As with any powerful technology, GPT raises ethical concerns, particularly in areas like bias, misinformation, and potential misuse.

Bias in AI-Generated Content

GPT models learn from data that can contain societal biases, and they may unintentionally reproduce these biases in their outputs. For instance, language models may generate stereotypical or harmful content based on biased training data. Efforts to mitigate bias include fine-tuning on more diverse datasets and applying fairness constraints during training.

Misinformation and Malicious Use

The ability of GPT to generate human-like text also opens up possibilities for misuse, such as generating fake news, deepfakes, or malicious code. This has sparked discussions on responsible AI development and the importance of content moderation tools that can detect and filter harmful outputs.

Privacy Concerns

GPT models, when trained on large-scale datasets, may inadvertently memorize sensitive information like personal data or confidential records. Ensuring that training data is anonymized and adhering to privacy regulations like GDPR is critical in addressing these concerns.

Strategies for Responsible AI Use

Organizations deploying GPT models need to establish guidelines for responsible use, including content moderation, transparency in AI decision-making, and ethical AI principles that prioritize fairness, privacy, and accountability.

Future of Generative Pretrained Transformers

The future of GPT models promises further breakthroughs in AI, particularly in scaling up model parameters and improving language understanding.

GPT-5 and Beyond

The next generation of GPT models, speculatively named GPT-5, promises further advancements in language understanding, scalability, and multimodal capabilities. For a sense of where things are heading, just look at Google’s recent Project Astra demos of multimodal assistants. They are well worth a look.

Scaling Up the Model

With GPT-3 at 175 billion parameters and GPT-4 speculated to have even more, the trend toward scaling model parameters continues. GPT-5 may reach or exceed trillions of parameters, unlocking unprecedented capabilities in NLP. Scaling up models allows them to learn more nuanced representations of language and better handle ambiguous or complex inputs.

Multimodal Learning

GPT-4 introduced multimodal capabilities, allowing the model to process not just text but also images. GPT-5 is expected to enhance this, possibly integrating video, audio, and even sensory data inputs. For example:

A multimodal GPT could process both text and images to generate more context-aware responses, such as generating captions for images or summarizing videos.

Applications could extend to autonomous systems, where multimodal GPTs assist in decision-making by combining textual data (e.g., reports) with visual inputs (e.g., camera footage).

Few-Shot and Zero-Shot Learning Enhancements

With few-shot and zero-shot learning, GPT models have already demonstrated the ability to perform tasks without extensive fine-tuning on task-specific data. GPT-5 is likely to improve in this area, making it more efficient at learning from minimal examples. For instance, a zero-shot learning GPT-5 might be able to:

Handle complex reasoning tasks, such as solving logic problems or answering legal queries, with little to no training data specific to the problem.

Generate domain-specific text for fields like astrophysics or law without extensive retraining, thanks to improved generalization capabilities.

Ethical Improvements and Alignment with Human Values

As GPT models become more powerful, aligning them with human values and ensuring responsible use becomes more critical. GPT-5 is expected to focus heavily on ethical AI development, including:

Bias Mitigation: New techniques may be introduced to better detect and eliminate biases in GPT’s outputs.

Human-AI Collaboration: Models may be designed to work more seamlessly alongside human operators, ensuring that AI-generated content aligns with ethical guidelines and factual accuracy.

Fine-Tuning Generative Pretrained Models for Specific Domains

While GPT models are powerful and general-purpose due to their large-scale pretraining, they can become even more effective when fine-tuned for specific domains. Fine-tuning involves retraining the pre-trained model on specialized datasets to optimize its performance in a particular field or application, allowing it to adapt to domain-specific nuances, terminology, and requirements. The ability to fine-tune GPT models makes them highly versatile and applicable across various industries, including healthcare, law, creative writing, and technical research.

Why Fine-Tuning is Necessary

The GPT architecture is trained on a vast corpus of general text data sourced from the internet, books, and various forms of public content. This gives it a broad understanding of language and general knowledge. However, for tasks that require more domain-specific expertise, such as legal document analysis or medical diagnostics, the model’s general understanding may not be sufficient. Fine-tuning allows GPT to adapt to the specific language, terminology, and problem-solving approaches of a domain.

Key reasons why fine-tuning is necessary include:

Domain-Specific Terminology: Technical fields like medicine or law have specialized vocabularies that are not always well-represented in general text corpora. Fine-tuning helps the model become familiar with this specific jargon.

Contextual Knowledge: Domains often have unique conventions and contexts that influence how information is interpreted. Fine-tuning helps GPT models become better at understanding domain-specific subtleties.

Improved Accuracy: General models may miss the precision required for specialized tasks. Fine-tuning improves the model’s accuracy by adjusting it to handle the specific characteristics of the domain data.

Steps in Fine-Tuning GPT Models

The fine-tuning process can be broken down into several key steps:

Data Collection: The first step involves collecting domain-specific datasets. For instance, fine-tuning a medical GPT might involve curating a dataset from medical journals, patient records, clinical guidelines, and textbooks.

Preprocessing the Data: The collected data must be preprocessed to remove irrelevant content, normalize text, and ensure that it’s compatible with the tokenization process used by GPT. For certain domains, the text may also need to be labeled for specific tasks like sentiment classification, diagnosis prediction, or question answering.

Transfer Learning Setup: Fine-tuning is essentially a transfer learning task. The GPT model, pretrained on large generic datasets, is further trained on the smaller, domain-specific dataset. During this phase, the learning rate is adjusted to be lower than that used in pretraining to avoid overfitting to the smaller dataset and to preserve the general knowledge learned during pretraining.

Optimization and Regularization: It is crucial to fine-tune GPT carefully to prevent overfitting, which could cause the model to become too specialized and lose its ability to generalize beyond the fine-tuning dataset. Techniques such as early stopping, dropout, and regularization help maintain the balance between specialization and generalization.

Evaluation and Iteration: Once the model has been fine-tuned, it is tested on a validation dataset specific to the domain. If the model performs well, it is ready for deployment. If not, further adjustments to hyperparameters or dataset refinement may be necessary.
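The data-handling steps above (preprocessing, then holding out a validation set for evaluation) can be sketched in plain Python. The cleaning rules, the minimum length, the 25% split, and the sample sentences are all assumptions for illustration; a real pipeline would be tailored to the domain and its privacy requirements.

```python
import random
import re

def clean_text(text):
    text = re.sub(r"<[^>]+>", " ", text)      # drop simple HTML-like tags
    return re.sub(r"\s+", " ", text).strip()  # collapse runs of whitespace

def prepare_dataset(raw_docs, min_words=3, val_fraction=0.25, seed=0):
    """Clean, de-duplicate, and split documents into (train, validation)."""
    seen, cleaned = set(), []
    for doc in raw_docs:
        text = clean_text(doc)
        key = text.lower()
        if len(text.split()) < min_words or key in seen:
            continue  # skip fragments and exact duplicates
        seen.add(key)
        cleaned.append(text)
    random.Random(seed).shuffle(cleaned)       # seeded for reproducibility
    n_val = max(1, int(len(cleaned) * val_fraction))
    return cleaned[n_val:], cleaned[:n_val]

# Hypothetical raw snippets standing in for a domain corpus.
raw_docs = [
    "<p>Patient presents with  mild fever.</p>",
    "Patient presents with mild fever.",
    "ok",
    "Dosage was adjusted after   two weeks.",
    "Follow-up visit scheduled in one month.",
    "Blood pressure remained within normal range.",
]
train, val = prepare_dataset(raw_docs)
print(len(train), len(val))   # 3 1
```

Keeping the validation examples out of the training set is what makes the evaluation step meaningful.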

Examples of Fine-Tuning GPT in Specific Domains

Legal Domain

In the legal field, GPT models can be fine-tuned to assist with legal research, contract analysis, and the generation of legal documentation. Legal GPT models are trained on corpora that include court rulings, statutes, legal opinions, and contracts. Fine-tuning GPT for legal tasks helps it handle complex legal language, precedent-based reasoning, and the hierarchical structure of legal arguments.

Case Study: Legal professionals can use a fine-tuned GPT to review contracts for specific clauses, identify potential legal risks, or generate legal opinions based on prior cases and legislation. Domain-specific models such as LegalBERT (built on BERT rather than GPT) and ContractNLP have been developed for these specialized tasks.

Medical Diagnostics

GPT models fine-tuned on medical literature, clinical records, and research papers can assist healthcare professionals in generating diagnostic reports, summarizing patient information, and even suggesting treatment plans. The medical domain requires a high level of precision and an understanding of medical terms, conditions, and procedures, which can be effectively addressed through fine-tuning.

Case Study: Fine-tuned models like MedGPT can help physicians by providing automated summaries of patient histories, suggesting possible diagnoses, or analyzing the latest medical research to inform treatment decisions.

Creative Writing and Art

In the realm of creative industries, GPT can be fine-tuned to produce poetry, screenplays, or even interactive narratives. By training the model on specific genres or authors, the GPT model can replicate unique writing styles and structures.

Case Study: AI-generated storytelling applications like AI Dungeon or custom screenwriting tools can fine-tune GPT models on specific genres (e.g., horror, sci-fi) or adapt them to the stylistic nuances of a particular author or filmmaker.

Financial Analysis

GPT fine-tuned for finance can analyze earnings reports, market trends, and financial statements to assist analysts with making informed decisions. Financial models are trained on datasets that include market data, financial news, and corporate filings.

Case Study: A fine-tuned financial GPT could generate stock market reports, summarize quarterly earnings, or even generate potential investment strategies based on historical market trends.

Comparing GPT to Other Language Models (BERT, T5, etc.)

While GPT is a dominant player in the NLP space, it is not the only state-of-the-art language model. Several other models, such as BERT (Bidirectional Encoder Representations from Transformers), T5 (Text-to-Text Transfer Transformer), and XLNet, have been developed with different architectures and goals in mind. Understanding the differences between these models can help clarify GPT’s unique strengths and weaknesses in various applications.

GPT vs. BERT

BERT, introduced by Google in 2018, is one of the most influential language models in NLP, particularly for tasks that require deep understanding of text such as question-answering and sentiment analysis. BERT is fundamentally different from GPT in terms of its architecture and training objectives.

Training Objective: BERT uses masked language modeling (MLM), where the model predicts missing words in a sentence by looking at both the preceding and succeeding context. This makes BERT a bidirectional model, meaning it takes into account information from both sides of a token when predicting its meaning. GPT, in contrast, is unidirectional, as it only considers past tokens (left-to-right) when generating text.

Architecture: BERT’s bidirectional nature gives it an edge in understanding context, which is especially beneficial for tasks like named entity recognition (NER) or semantic similarity. GPT, on the other hand, excels at text generation tasks due to its autoregressive nature, where each word is predicted sequentially.
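The two training objectives are easiest to compare side by side on one sentence. In this sketch the masked position is chosen by hand to keep the output predictable; BERT itself picks roughly 15% of tokens at random, and the `[MASK]` string here simply mirrors its placeholder token.

```python
MASK = "[MASK]"

def masked_lm_example(tokens, mask_positions):
    """BERT-style: hide some tokens; the model sees context on both sides."""
    inputs = [MASK if i in mask_positions else tok for i, tok in enumerate(tokens)]
    targets = {i: tokens[i] for i in sorted(mask_positions)}
    return inputs, targets

def causal_lm_examples(tokens):
    """GPT-style: predict each token from the tokens to its left only."""
    return [(tokens[:i], tokens[i]) for i in range(1, len(tokens))]

tokens = "the cat sat on the mat".split()

mlm_inputs, mlm_targets = masked_lm_example(tokens, mask_positions={2})
print(mlm_inputs)    # ['the', 'cat', '[MASK]', 'on', 'the', 'mat']
print(mlm_targets)   # {2: 'sat'}

clm_pairs = causal_lm_examples(tokens)
print(clm_pairs[1])  # (['the', 'cat'], 'sat')
```

To predict "sat", the masked model can read "on the mat" as well as "the cat", whereas the causal model only ever sees "the cat".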

| Model | Training Objective | Best Use Cases | Advantages |
| --- | --- | --- | --- |
| GPT | Unidirectional (autoregressive) | Text generation, few-shot learning | Coherent, long-text generation |
| BERT | Bidirectional (masked modeling) | Sentiment analysis, question answering | Deep context understanding |

Use Case Comparison:

GPT: Ideal for tasks that involve generating coherent and creative text, such as long-form writing, chatbots, or story generation.

BERT: Better suited for tasks that require full context understanding, such as reading comprehension, question-answering, and classification tasks.

GPT vs. T5

T5 (Text-to-Text Transfer Transformer), introduced by Google, is another advanced model designed to treat all NLP tasks as text-to-text problems. T5 is unique in that it reformulates all tasks—whether summarization, translation, classification, or question answering—as generating text based on input text.

Training Objective: T5 is an encoder-decoder model pretrained with a denoising (span-corruption) objective: spans of the input text are replaced with placeholder tokens, and the model learns to generate the missing spans. GPT, by contrast, is a decoder-only model trained autoregressively to predict the next token. Both objectives involve generating text, but T5’s is designed to carry over to a wide range of input-to-output tasks.

Task Flexibility: T5’s text-to-text framework is highly versatile, as any NLP task can be reformulated as a text generation task. For instance, for classification tasks, T5 generates a text label as an output. This makes T5 adaptable across a wide range of problems, offering flexibility that even GPT lacks.
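A small sketch can show what a denoising training example looks like. Here the corrupted spans are picked by hand (T5 samples them at random during pretraining), and the `<extra_id_N>` sentinel names follow the convention used by common T5 tokenizers; treat the details as illustrative.

```python
def span_corrupt(tokens, spans):
    """Build a (corrupted input, target) pair.

    spans: sorted, non-overlapping (start, end) index pairs, end exclusive.
    """
    inputs, targets = [], []
    cursor = 0
    for n, (start, end) in enumerate(spans):
        sentinel = f"<extra_id_{n}>"
        inputs.extend(tokens[cursor:start])   # keep the text before the span
        inputs.append(sentinel)               # replace the span with a sentinel
        targets.append(sentinel)              # the target names the sentinel...
        targets.extend(tokens[start:end])     # ...followed by the hidden tokens
        cursor = end
    inputs.extend(tokens[cursor:])
    targets.append(f"<extra_id_{len(spans)}>")  # final sentinel closes the target
    return inputs, targets

tokens = "Thank you for inviting me to your party last week .".split()
inputs, targets = span_corrupt(tokens, spans=[(2, 4), (8, 9)])
print(" ".join(inputs))   # Thank you <extra_id_0> me to your party <extra_id_1> week .
print(" ".join(targets))  # <extra_id_0> for inviting <extra_id_1> last <extra_id_2>
```

Both the input and the target are plain text, which is what lets the same model handle translation, summarization, or classification by changing only the strings.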

| Model | Training Objective | Best Use Cases | Advantages |
| --- | --- | --- | --- |
| GPT | Unidirectional (autoregressive) | Text generation, few-shot learning | Coherent, long-text generation |
| T5 | Denoising autoencoder (text-to-text) | Multi-task learning (summarization, translation) | Adaptable to multiple task formats |

Use Case Comparison:

GPT: Primarily excels in generating fluent, coherent text without requiring specialized task-specific fine-tuning.

T5: Ideal for multi-task learning, where a single model can be fine-tuned to perform various NLP tasks, from translation to summarization, using its flexible text-to-text format.

GPT vs. XLNet

XLNet is another language model that aims to address the limitations of both GPT and BERT. It combines autoregressive and bidirectional learning through a permutation-based approach: the words stay in place, but the order in which they are predicted is shuffled during training. This helps XLNet perform well in both generative tasks (like GPT) and comprehension tasks (like BERT).

Training Objective: Rather than masking tokens as BERT does, XLNet maximizes the likelihood of a sequence over sampled permutations of the prediction order, so each token learns to be predicted from context on both its left and its right. This lets it capture bidirectional context without relying on artificial mask tokens.

Architecture: XLNet retains the autoregressive advantages of GPT, allowing for text generation, while also gaining the bidirectional context modeling seen in BERT. This makes XLNet a hybrid model, capable of excelling in both generative and understanding tasks.
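One way to picture the permutation idea is as an attention mask: a token may look at any token that comes earlier in the prediction order, wherever it sits in the sentence. The sketch below is a simplified illustration of that rule (it leaves out XLNet’s two-stream attention), and the example order is arbitrary.

```python
def permutation_mask(order):
    """order[k] is the position predicted at step k.

    Returns mask where mask[i][j] is True if position i may attend to position j.
    """
    n = len(order)
    step_of = [0] * n
    for step, position in enumerate(order):
        step_of[position] = step
    # Position i sees position j only if j was predicted earlier in the order.
    return [[step_of[j] < step_of[i] for j in range(n)] for i in range(n)]

# The ordinary left-to-right order reproduces a GPT-style causal pattern.
left_to_right = permutation_mask([0, 1, 2, 3])

# A shuffled order: predict position 2 first, then 0, then 3, then 1.
permuted = permutation_mask([2, 0, 3, 1])
for row in permuted:
    print([int(x) for x in row])
```

With the shuffled order, position 1 is predicted last and can see positions 0, 2, and 3, so it draws on context from both sides even though prediction is still one token at a time.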

| Model | Training Objective | Best Use Cases | Advantages |
| --- | --- | --- | --- |
| GPT | Unidirectional (autoregressive) | Text generation, few-shot learning | Coherent, long-text generation |
| XLNet | Permutation-based bidirectional autoregressive | Comprehension and generation tasks | Hybrid model, capturing bidirectional context |

Use Case Comparison:

GPT: Best for creative tasks requiring long-form text generation and general-purpose language models.

XLNet: Offers strong performance in both understanding and generation tasks due to its hybrid architecture, making it ideal for complex language tasks that require both.

GPT vs. Other Models: Summary of Strengths and Weaknesses

While GPT excels in generative tasks due to its autoregressive nature, models like BERT and T5 offer strengths in tasks that require a deeper understanding of context or multitasking capabilities. The following table summarizes the comparison:

| Model | Best Use Cases | Strengths | Limitations |
| --- | --- | --- | --- |
| GPT | Text generation, creative writing | Coherent, fluent, and long-text generation | Lacks bidirectional context understanding |
| BERT | Sentiment analysis, NER, QA | Bidirectional context understanding | Not suitable for text generation |
| T5 | Multi-task learning | Flexible, handles multiple NLP tasks | More complex to fine-tune |
| XLNet | Comprehension and generation | Hybrid approach, handles context well | More computationally expensive |

Latest Generative Pre-Trained Transformer News

Models like OpenAI’s GPT-4 and products like Microsoft’s Copilot are transforming the ways we interact with technology, offering unprecedented capabilities in content generation, code writing, and complex problem-solving. Here’s a roundup of the latest developments in generative pre-trained transformer technology as of 2024.

Microsoft’s Copilot Updates for Microsoft 365

Microsoft has been leveraging AI to enhance its suite of productivity tools. In July 2024, Microsoft announced significant updates to its Copilot for Microsoft 365, incorporating advanced generative AI features into familiar applications like Word, Excel, Outlook, and Teams. Here are some key highlights:

Excel Data Insights: Copilot in Excel is now equipped with even more powerful analytical capabilities. Users can quickly gain insights from complex datasets, identify trends, and even generate visual representations like charts and graphs. This update means that even those with minimal experience in data analysis can harness the power of AI to make data-driven decisions.

Enhanced Meeting Summaries in Teams: Microsoft 365 Copilot has introduced enhanced meeting summaries within Microsoft Teams. It uses AI to compile key points, track actions, and create a concise meeting recap. This feature is a game-changer for businesses looking to streamline communications and ensure that nothing is missed during meetings.

Personalized Assistance in Outlook: The new Copilot updates in Outlook allow users to craft more effective and personalized emails. It offers suggestions for language improvements, automatically composes replies, and summarizes lengthy threads, which saves time and ensures clearer communication.

Microsoft Word and PowerPoint Upgrades: In Microsoft Word, Copilot now provides more robust writing suggestions, helping users to fine-tune their content. Meanwhile, in PowerPoint, it assists in creating visually appealing presentations by suggesting layouts and even generating slides from brief textual inputs.

These updates signal Microsoft’s dedication to integrating GPT technology seamlessly into everyday work, helping professionals become more productive and efficient.

OpenAI’s New Models and Developer Tools

OpenAI has also been making headlines with its announcements at DevDay 2024. The organization, which pioneered the GPT models, revealed a slew of new models and developer tools designed to push the boundaries of AI capabilities. Here’s a glimpse of the latest from OpenAI:

New Model Releases: OpenAI introduced the next generation of its GPT models, which promise to be more powerful, accurate, and adaptable. These new models build upon the success of GPT-4, delivering improved language comprehension, faster processing, and the ability to handle more nuanced prompts.

Developer Tools for Integration: OpenAI’s latest announcement focuses on making it easier for developers to incorporate GPT technology into their own applications. With enhanced APIs and toolkits, developers can now customize and deploy generative AI solutions tailored to their specific business needs. This initiative underscores OpenAI’s goal of democratizing access to AI and fostering innovation across industries.

Emphasis on Safety and Ethics: OpenAI continues to prioritize the ethical use of AI, incorporating more robust safeguards in its new models. These enhancements include better moderation tools and improved methods to identify and mitigate potential misuse, ensuring that AI remains a tool for positive impact.

Conclusion

In summary, GPT models have pushed the boundaries of what AI can achieve in natural language understanding and generation. As these models continue to evolve, they promise to bring about even more profound changes in the way we interact with and leverage AI in everyday tasks, from content creation to complex decision-making processes.

If you want to develop custom enterprise AI GPT models for your organization, contact our Microsoft-certified experts at Al Rafay Consulting.